How to Build a Secure AI System on Your Own Data: A Practical Guide for Tech Leaders

Learn how to build secure AI on your own data: architecture, use cases, ROI, and a step-by-step rollout plan. A practical guide for CTOs and tech leaders.

1. Executive Summary: Why “AI on Your Own Data” Is the Next Competitive Edge

Most companies have already run small AI experiments. Few have turned them into secure, production-grade systems that work on their own data.

For tech leaders at companies with 100 to 1,500 full-time employees, the next competitive edge is clear:

- Use your internal data (not just the public internet) to power AI copilots and assistants.

- Keep sensitive information inside your perimeter while still benefiting from modern models.

- Turn AI from one-off experiments into infrastructure with clear ROI, observability, and guardrails.

This guide explains:

- What “AI on your own data” really means.

- How retrieval-augmented generation (RAG) fits into the picture.

- A step-by-step implementation plan your team can follow.

- High-impact use cases and economic impact.

- How Calljmp helps you build secure, data-aware AI systems as code.

2. Why Tech Leaders Are Moving to Secure AI on Internal Data

AI is no longer just a buzzword in your board deck. Your teams are already copy-pasting data into ChatGPT and other tools, jumping between Confluence, Notion, Jira, email, and ticketing systems to find answers, and spinning up one-off scripts and prototypes that quickly become hard to maintain.

The pain points are predictable: high-value people lose time hunting for information across scattered systems; many AI proofs of concept are launched but few deliver measurable, repeatable impact; and sensitive customer data and internal documents increasingly touch third-party tools that you don’t fully control, raising security and compliance concerns.

At the same time, the opportunity is obvious:

Use AI as a knowledge and workflow layer on top of your internal systems — while staying within your security and compliance boundaries.

That’s what “AI on your own data” is about: building a repeatable architecture where AI can read, retrieve, and reason over your proprietary data securely.

3. What “AI on Your Own Data” Actually Means (and Where RAG Fits)

In simple terms, AI on your own data means your assistants, copilots, and automations answer questions and perform tasks using your internal knowledge, not only what the model was trained on.

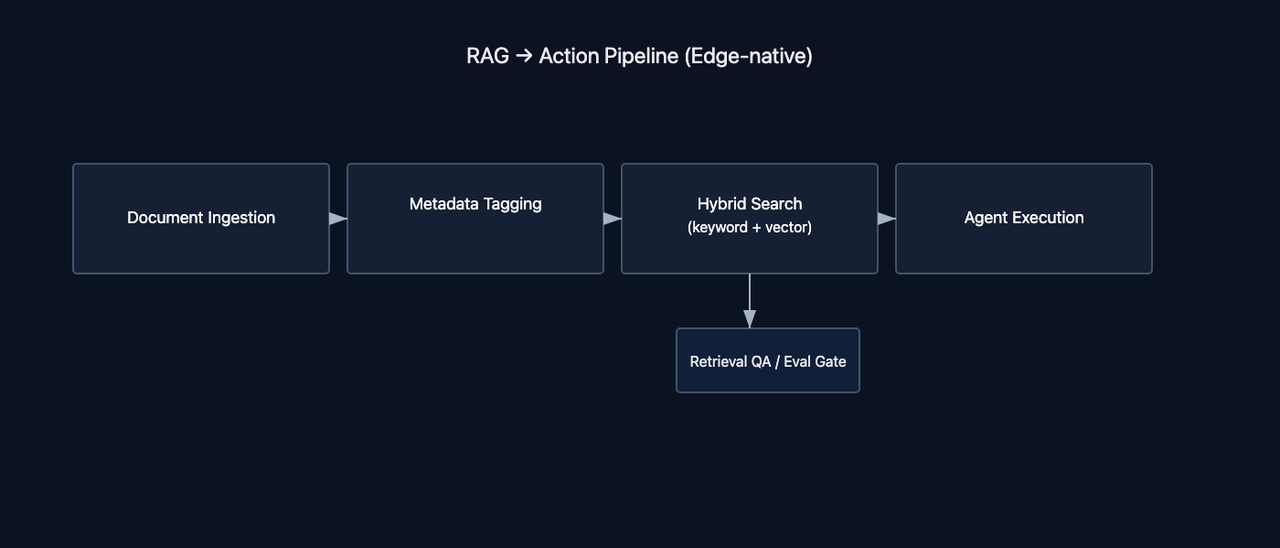

The dominant pattern behind this is retrieval-augmented generation (RAG). A user asks a question or triggers an action, the system retrieves relevant pieces of internal data (documents, records, tickets), and an AI model uses that retrieved context to generate a grounded, up-to-date answer.

This matters because:

- You don’t need to send your entire dataset to a public LLM.

- You don’t have to fine-tune a large model as the first step.

- You can control exactly what data is accessed and how.

For C-level roles, think of RAG as:

“Search + AI explanation” on top of your proprietary data, wrapped in your security model.

4. Core Principles for Secure AI on Proprietary Data

Before you invest in building AI on internal company data, it’s essential to align on a few architectural principles that ensure security, compliance, and long-term reliability.

4.1 Keep sensitive data inside your perimeter

All preprocessing and indexing of sensitive data should happen inside your environment:

- Your VPC / private cloud

- Your on-prem infrastructure

Calljmp’s ingestion pipeline is designed to run where the data lives: data is processed locally, not shipped to a vendor’s servers.

4.2 Store meaning, not raw content

Instead of persisting original documents in external systems, transform the data into semantic representations that preserve meaning without keeping a readable copy of the source text. The process splits documents into smaller chunks, converts them into embeddings with lightweight open-source models running inside your perimeter, and stores only those vectors, plus metadata that points back to the source, in your own database. Embeddings are hard to read but not impossible to invert: published embedding-inversion attacks have recovered parts of the original text from vectors, so apply the same access controls to them as to any other sensitive data. Retaining embeddings rather than full documents still gives you rich semantic search while keeping the footprint of sensitive content small.
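As a rough illustration of the chunk, embed, and store flow described above, the TypeScript sketch below splits text into overlapping chunks and keeps only a vector plus metadata for each one. The `toyEmbed` function is a placeholder assumption (a hashed bag-of-words) standing in for a real embedding model running inside your perimeter, and `VectorRecord`, `chunkText`, and `indexDocument` are hypothetical names chosen for this example.

```typescript
// Hypothetical record shape: a vector plus metadata, with no raw text field.
interface VectorRecord {
  sourceId: string;   // pointer back to the document in its system of record
  chunkIndex: number; // position of the chunk within the document
  vector: number[];   // the embedding
}

// Split text into word-based chunks with a small overlap between neighbours.
function chunkText(text: string, chunkSize = 50, overlap = 10): string[] {
  if (overlap >= chunkSize) throw new Error("overlap must be smaller than chunkSize");
  const words = text.split(/\s+/).filter((w) => w.length > 0);
  const chunks: string[] = [];
  for (let start = 0; start < words.length; start += chunkSize - overlap) {
    chunks.push(words.slice(start, start + chunkSize).join(" "));
    if (start + chunkSize >= words.length) break; // last chunk reached the end
  }
  return chunks;
}

// Placeholder embedding (assumption): hashed bag-of-words, L2-normalised.
// A real pipeline would call a local embedding model here instead.
function toyEmbed(text: string, dims = 64): number[] {
  const vector = new Array<number>(dims).fill(0);
  for (const word of text.toLowerCase().split(/\W+/)) {
    if (word.length === 0) continue;
    let hash = 2166136261; // FNV-1a offset basis
    for (let i = 0; i < word.length; i++) {
      hash ^= word.charCodeAt(i);
      hash = Math.imul(hash, 16777619) >>> 0;
    }
    const bucket = hash % dims;
    vector[bucket] = (vector[bucket] ?? 0) + 1;
  }
  const norm = Math.sqrt(vector.reduce((sum, v) => sum + v * v, 0));
  return norm === 0 ? vector : vector.map((v) => v / norm);
}

// Chunk a document and keep only vectors and metadata; chunk text is not stored.
function indexDocument(sourceId: string, text: string): VectorRecord[] {
  return chunkText(text).map((chunk, chunkIndex) => ({
    sourceId,
    chunkIndex,
    vector: toyEmbed(chunk),
  }));
}
```

Because each record carries `sourceId` and `chunkIndex`, the matching passage can be fetched from the source system at query time, under that system's own permissions.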

4.3 Separate ingestion, retrieval, and generation

A robust architecture keeps these layers distinct:

- Ingestion: Connect data sources, preprocess securely, create embeddings.

- Retrieval: Efficient semantic search over your vectors and metadata.

- Generation: AI model uses retrieved context to answer or act.

This separation simplifies governance, debugging, and evolution over time.

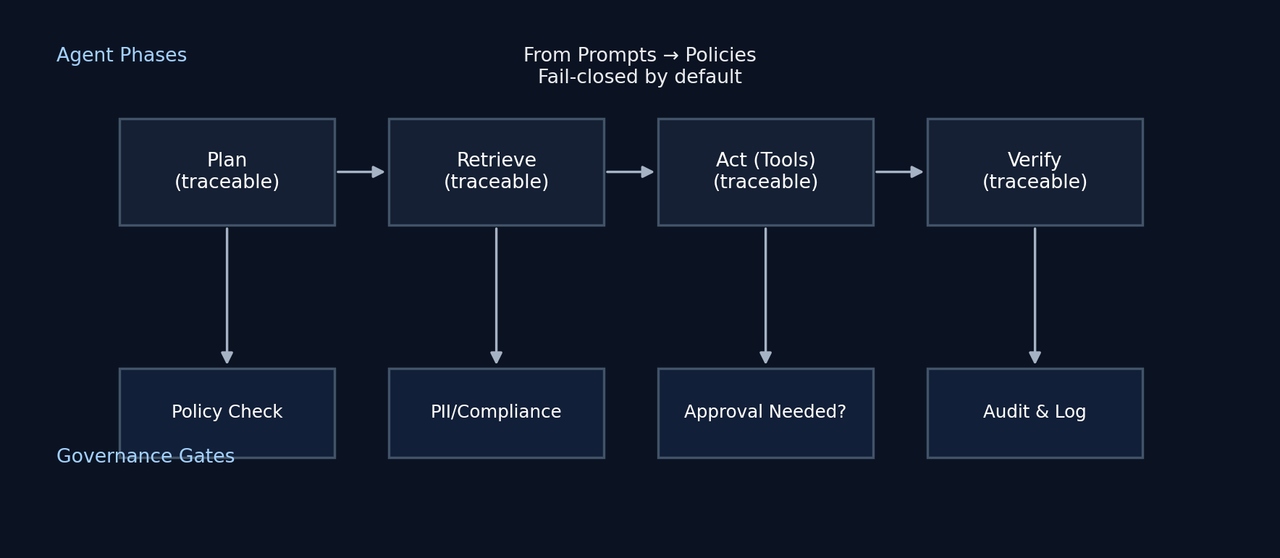
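One way to picture the separation of layers is as three small contracts that can be implemented and replaced independently. The sketch below is only an illustration: all names are hypothetical, the toy index ranks by keyword overlap rather than embeddings and holds chunk text in memory for simplicity, and the generator is a stub where a real system would call a model.

```typescript
// Hypothetical layer contracts; none of these names come from a real SDK.
interface KnowledgeChunk { sourceId: string; text: string }
interface DocumentIngestor { ingest(sourceId: string, text: string): void }
interface ContextRetriever { retrieve(query: string, topK: number): KnowledgeChunk[] }
interface AnswerGenerator { generate(query: string, context: KnowledgeChunk[]): string }

// Toy ingestion and retrieval: keyword overlap stands in for semantic search.
class InMemoryIndex implements DocumentIngestor, ContextRetriever {
  private chunks: KnowledgeChunk[] = [];

  ingest(sourceId: string, text: string): void {
    this.chunks.push({ sourceId, text });
  }

  retrieve(query: string, topK: number): KnowledgeChunk[] {
    const terms = new Set(query.toLowerCase().split(/\W+/).filter((t) => t.length > 0));
    return this.chunks
      .map((chunk) => ({
        chunk,
        // Score = number of chunk words that also appear in the query.
        score: chunk.text.toLowerCase().split(/\W+/).filter((w) => terms.has(w)).length,
      }))
      .filter((hit) => hit.score > 0)
      .sort((a, b) => b.score - a.score)
      .slice(0, topK)
      .map((hit) => hit.chunk);
  }
}

// Stub generator: a real one would send the query and context to a model.
class TemplateGenerator implements AnswerGenerator {
  generate(query: string, context: KnowledgeChunk[]): string {
    const sources = context.map((c) => c.sourceId).join(", ") || "none";
    return `Answer to "${query}" grounded in: ${sources}`;
  }
}

// The only place where the layers meet.
function answerQuestion(retriever: ContextRetriever, generator: AnswerGenerator, query: string): string {
  return generator.generate(query, retriever.retrieve(query, 3));
}
```

Since `answerQuestion` depends only on the interfaces, swapping the retriever for a vector store or the generator for a different model does not touch the other layers.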

4.4 Observability and traceability by design

You’ll need:

- Full execution traces for each AI request.

- Logs, retries, and error handling.

- Metrics for latency, accuracy, and cost.

Calljmp’s agentic runtime captures these traces automatically and retains logs for a limited period to support debugging and audits.
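To make the idea of per-request traces concrete, here is a minimal, vendor-neutral sketch of a wrapper that records one span per attempt and retries on failure. It is not Calljmp's API: `TraceSpan`, `traceLog`, and `tracedStep` are hypothetical names, and the wrapper is synchronous to keep the example short, whereas real model and retrieval calls would normally be asynchronous.

```typescript
// Hypothetical trace record; not tied to any specific tracing product.
interface TraceSpan {
  requestId: string;
  step: string;
  attempt: number;
  durationMs: number;
  ok: boolean;
  error?: string;
}

// In-memory sink for the example; a real system would ship spans to a log store.
const traceLog: TraceSpan[] = [];

// Run one step, record a span for every attempt, and retry when it throws.
function tracedStep<T>(requestId: string, step: string, fn: () => T, maxAttempts = 3): T {
  let lastError: unknown;
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const startedAt = Date.now();
    try {
      const result = fn();
      traceLog.push({ requestId, step, attempt, durationMs: Date.now() - startedAt, ok: true });
      return result;
    } catch (err) {
      lastError = err;
      traceLog.push({
        requestId,
        step,
        attempt,
        durationMs: Date.now() - startedAt,
        ok: false,
        error: err instanceof Error ? err.message : String(err),
      });
    }
  }
  // All attempts failed: surface the last error to the caller.
  throw lastError;
}
```

Wrapping the retrieval and generation steps of a request with the same `requestId` yields a trace that shows which step failed, how often it was retried, and how long each attempt took.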

4.5 Governance and access control

AI must operate within the same security and compliance boundaries as the rest of your infrastructure. That includes enforcing role-based access to sensitive data, applying explicit access policies, and maintaining complete audit logs that show who accessed what information and how it was used. Requests should be authorized, logged, and explainable, ensuring that AI becomes an extension of your governance framework rather than a blind spot.
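A small sketch can show how role-based access and audit logging might sit in front of retrieval. Everything here is an assumption made for illustration: the role names, the `rolePolicy` mapping, and the `authorizedRetrieve` helper are hypothetical, and a real deployment would read roles and policies from your identity provider rather than a hard-coded table.

```typescript
type Sensitivity = "public" | "internal" | "confidential";

interface IndexedChunk { sourceId: string; sensitivity: Sensitivity }
interface RequestingUser { id: string; role: string }
interface AuditEntry { userId: string; query: string; returnedSourceIds: string[]; at: string }

// Assumed policy: which sensitivity levels each role may read.
const rolePolicy: Record<string, Sensitivity[]> = {
  contractor: ["public"],
  employee: ["public", "internal"],
  security: ["public", "internal", "confidential"],
};

// In-memory audit trail for the example; production systems need durable storage.
const auditTrail: AuditEntry[] = [];

// Filter retrieval candidates by the caller's role and log what was returned.
function authorizedRetrieve(
  user: RequestingUser,
  query: string,
  candidates: IndexedChunk[],
): IndexedChunk[] {
  const allowed = rolePolicy[user.role] ?? []; // unknown roles see nothing
  const visible = candidates.filter((c) => allowed.includes(c.sensitivity));
  auditTrail.push({
    userId: user.id,
    query,
    returnedSourceIds: visible.map((c) => c.sourceId),
    at: new Date().toISOString(),
  });
  return visible;
}
```

Filtering before generation means the model never sees content the user is not entitled to, and the audit entry records who asked what and which sources were used.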

5. Step-by-Step Guide: How to Implement Secure AI on Your Own Data

5.1 Step 1 – Align on business goals and success metrics

Start by choosing one or two focused use cases that deliver fast, visible impact. Common early wins include:

- An internal knowledge copilot for engineering, support, or sales

- A support-agent assistant that drafts answers using your internal docs

- An in-app copilot embedded directly into your SaaS product

Define success metrics upfront, such as:

- Reduced search time

- Faster ticket resolution

- Improved agent efficiency

- Higher customer satisfaction

Clear KPIs create alignment and help the team measure progress.

5.2 Step 2 – Map your data sources and classify sensitivity

Identify where valuable knowledge lives across the organization:

- Wikis (Confluence, Notion)

- Ticketing systems (Zendesk, Jira Service Management)

- Product documentation and manuals

- CRM and customer data

- Logs, runbooks, and internal tools

Classify each dataset as public, internal, confidential, or highly sensitive. This determines what can be safely included in the first iteration and what requires additional controls.
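The inventory and its classification can be captured as plain data, which makes the scope of the first iteration explicit and reviewable. The sketch below is illustrative only: the type and function names are hypothetical, and the sensitivity assigned to each example source is an assumption, not a recommendation.

```typescript
// The four levels named above, ordered from least to most sensitive.
type SensitivityLevel = "public" | "internal" | "confidential" | "highly-sensitive";
const levelOrder: SensitivityLevel[] = ["public", "internal", "confidential", "highly-sensitive"];

interface DataSourceEntry {
  name: string;
  system: string;
  sensitivity: SensitivityLevel;
}

// Example inventory; the classifications are made up for illustration.
const exampleInventory: DataSourceEntry[] = [
  { name: "Product documentation", system: "Docs site", sensitivity: "public" },
  { name: "Confluence wiki", system: "Confluence", sensitivity: "internal" },
  { name: "Support tickets", system: "Zendesk", sensitivity: "confidential" },
  { name: "Customer records", system: "CRM", sensitivity: "highly-sensitive" },
];

// Select the sources whose sensitivity does not exceed the chosen ceiling.
function sourcesUpTo(inventory: DataSourceEntry[], maxLevel: SensitivityLevel): DataSourceEntry[] {
  const maxRank = levelOrder.indexOf(maxLevel);
  return inventory.filter((source) => levelOrder.indexOf(source.sensitivity) <= maxRank);
}

// A first iteration limited to public and internal material.
const firstIterationScope = sourcesUpTo(exampleInventory, "internal");
```

Raising the ceiling later, once additional controls are in place, is then a one-line change that can go through normal code review.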

5.3 Step 3 – Design a secure ingestion and indexing pipeline

Your ingestion pipeline should run entirely inside your own environment (VPC, private cloud, or on-prem). The pipeline should:

- Connect to internal and external data sources

- Detect new or updated content automatically

- Split documents into optimized chunks

- Generate embeddings using local open-source models (for example E5, Instructor, or Llama-based embedding models)

- Store only semantic vectors and metadata in your vector database

Raw documents remain in your systems and never leave your perimeter. Calljmp processes data in memory and discards it after embedding.
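Detecting new or updated content is often done by comparing a fingerprint of each document with the one recorded at the previous run. The sketch below shows that idea under stated assumptions: `contentFingerprint` uses FNV-1a, a fast non-cryptographic hash chosen here only to keep the example dependency-free (a real pipeline might prefer SHA-256 or the source system's modification timestamps), and `planSync` and its types are hypothetical names.

```typescript
interface SourceDoc { id: string; text: string }

interface SyncPlan {
  toEmbed: string[];              // new or changed documents to (re)embed
  toDelete: string[];             // documents that disappeared from the source
  nextState: Map<string, string>; // id -> fingerprint, persisted for the next run
}

// FNV-1a fingerprint of the content (non-cryptographic; assumption for brevity).
function contentFingerprint(text: string): string {
  let hash = 0x811c9dc5;
  for (let i = 0; i < text.length; i++) {
    hash ^= text.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash.toString(16);
}

// Compare the current documents with the previous fingerprints.
function planSync(previousState: Map<string, string>, currentDocs: SourceDoc[]): SyncPlan {
  const nextState = new Map<string, string>();
  const toEmbed: string[] = [];
  for (const doc of currentDocs) {
    const fingerprint = contentFingerprint(doc.text);
    nextState.set(doc.id, fingerprint);
    if (previousState.get(doc.id) !== fingerprint) toEmbed.push(doc.id);
  }
  const toDelete = Array.from(previousState.keys()).filter((id) => !nextState.has(id));
  return { toEmbed, toDelete, nextState };
}
```

Only the documents in `toEmbed` need to be chunked and embedded again, and vectors for the ids in `toDelete` should be removed so that retired content stops appearing in answers.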

5.4 Step 4 – Build the retrieval and reasoning layer

Once your data is indexed, build the retrieval logic that powers AI reasoning:

- Semantic search across embeddings

- Metadata filters for precision

- Optional query rewriting for vague inputs

Connect retrieval to your chosen model:

- Open-source (fully private)

- Hosted commercial models such as OpenAI’s GPT models or xAI’s Grok (explicitly enabled, since retrieved context is sent to the provider)

Each AI call follows a clear pattern:

- User query → retrieve relevant chunks → model generates a grounded answer

In Calljmp, this entire flow is defined in TypeScript agents for full control.
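Independently of any particular platform, the retrieval pattern above can be sketched in a few functions: cosine similarity over stored vectors, a metadata filter, and a prompt that restricts the model to the retrieved context. This is a generic illustration, not Calljmp agent code; all names are hypothetical, the `team` field is an assumed metadata attribute, and a production system would use a vector database index instead of a linear scan.

```typescript
interface StoredVector { sourceId: string; team: string; vector: number[] }
interface SearchHit { sourceId: string; score: number }

// Cosine similarity between two vectors of equal length.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("vector dimensions differ");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    const x = a[i] ?? 0;
    const y = b[i] ?? 0;
    dot += x * y;
    normA += x * x;
    normB += y * y;
  }
  const denominator = Math.sqrt(normA) * Math.sqrt(normB);
  return denominator === 0 ? 0 : dot / denominator;
}

// Linear-scan search: apply the metadata filter first, then rank by similarity.
function searchVectors(
  queryVector: number[],
  store: StoredVector[],
  team: string | undefined,
  topK: number,
): SearchHit[] {
  return store
    .filter((item) => team === undefined || item.team === team)
    .map((item) => ({ sourceId: item.sourceId, score: cosineSimilarity(queryVector, item.vector) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, topK);
}

// Assemble a prompt that asks the model to rely on the retrieved passages only.
function buildGroundedPrompt(question: string, passages: string[]): string {
  const context = passages.map((passage, i) => `[${i + 1}] ${passage}`).join("\n");
  return `Answer using only the context below.\n\nContext:\n${context}\n\nQuestion: ${question}`;
}
```

The passages passed to `buildGroundedPrompt` would be fetched from the source systems using the `sourceId` of each hit, which keeps the vector store free of raw text.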

5.5 Step 5 – Connect AI to internal and external interfaces

Expose AI where people work:

- Slack or Teams bots

- Internal dashboards or portals

- Support agent consoles

- In-app copilots for SaaS products

Calljmp agents can be invoked through:

- REST API

- SDKs

- Direct backend integration

This makes it easy to roll out AI to both employees and customers.

5.6 Step 6 – Add security, observability, and guardrails

Make the system production-ready with:

- Authentication and authorization

- Rate limits and quotas

- Detailed logs and traces for every request

- Error handling, retries, and workflow control

- Guardrails for sensitive actions or restricted queries

- Human-in-the-loop (HITL) approval paths

Calljmp provides built-in tracing, retries, metrics, and cost visibility.
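Two of the controls listed above, rate limits and guardrails with human approval, are simple enough to sketch generically. The example below is not a description of Calljmp's built-in features: `FixedWindowLimiter` and `reviewAction` are hypothetical names, the action lists are invented for illustration, and a fixed-window counter is just one of several possible rate-limiting strategies.

```typescript
type GuardDecision = "allow" | "needs-approval" | "deny";

// Per-user fixed-window rate limiter kept in memory.
class FixedWindowLimiter {
  private windows = new Map<string, { windowStart: number; count: number }>();

  constructor(private limit: number, private windowMs: number) {
    if (limit < 1) throw new Error("limit must be at least 1");
  }

  // Returns true if the request fits in the user's current window.
  tryAcquire(userId: string, now: number = Date.now()): boolean {
    const current = this.windows.get(userId);
    if (!current || now - current.windowStart >= this.windowMs) {
      this.windows.set(userId, { windowStart: now, count: 1 }); // start a new window
      return true;
    }
    if (current.count >= this.limit) return false;
    current.count += 1;
    return true;
  }
}

// Assumed policy lists; real ones would come from configuration.
const deniedActions = new Set(["delete_customer_record"]);
const approvalActions = new Set(["issue_refund", "send_external_email"]);

// Decide whether an agent action may run, needs a human, or is blocked.
function reviewAction(action: string): GuardDecision {
  if (deniedActions.has(action)) return "deny";
  if (approvalActions.has(action)) return "needs-approval";
  return "allow";
}
```

An agent loop would call `tryAcquire` before handling a request and `reviewAction` before executing any tool, pausing for a human decision whenever the result is `"needs-approval"`.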

5.7 Step 7 – Run a pilot, learn, and scale

Before rolling out broadly, start with a controlled pilot:

- Target one team or workflow

- Collect feedback and usage data

- Refine prompts, retrieval logic, and UX

- Validate impact against KPIs

Once stable, expand to more teams and use cases. This staged approach turns AI from scattered experiments into a reliable, organization-wide capability.

6. High-Impact Use Cases for Mid-Size Tech Companies

6.1 Internal Knowledge Copilot

- Unified search across docs, wikis, tickets, specs, and internal systems

- Faster onboarding and smoother knowledge transfer

- Reduced interruptions across engineering, support, and product teams

- Better decision-making with instant access to institutional knowledge

6.2 Customer Support and Success Assistant

- AI assistant for support agents that can:

  - Suggest accurate answers based on internal documentation

  - Link to relevant playbooks, past tickets, and product guides

  - Summarize long conversations and propose next actions

- Self-service AI for customers to deflect simple tickets

- Improved consistency and speed in customer communication

6.3 In-Product SaaS Copilot

- Context-aware help directly inside your application’s UI

- Explains features, workflows, and best practices in real time

- Provides personalized guidance based on the user’s own data

- Turns your product into a smart, proactive assistant rather than a static tool

7. The Economic Impact: How Secure AI on Your Data Pays Off

Building AI that operates on internal company data delivers tangible economic benefits across cost savings, revenue growth, and risk reduction. When implemented correctly, these systems reduce operational friction, enhance customer experience, and create measurable value even in the early stages of deployment.

7.1 Cost savings

Secure, data-aware AI significantly reduces the time employees spend searching for information across fragmented tools and systems. Support and operations teams resolve issues faster, lowering average handle times and improving throughput. As internal copilots become more capable, companies rely less on a patchwork of external AI tools, reducing overlapping subscription and integration costs. These efficiencies accumulate quickly across engineering, support, product, and customer-facing functions.

7.2 Revenue and product upside

Improved customer experience is one of the strongest drivers of economic impact. Faster, more accurate responses lead to higher satisfaction and retention. In-product copilots increase engagement by guiding users through workflows, explaining features in real time, and helping them achieve value faster. These improvements contribute directly to expansion opportunities, stronger usage patterns, and lower churn. For SaaS companies, embedding AI directly into the product becomes a differentiator that boosts overall competitiveness.

7.3 Risk reduction

Keeping sensitive data within your perimeter dramatically reduces the risks associated with AI adoption. By eliminating ad-hoc copy-pasting of proprietary information into third-party tools, companies strengthen their security posture and minimize the likelihood of data leaks. Built-in auditability and governance ensure every request is tracked and explainable, which is critical for compliance-heavy industries. As AI usage grows, a secure architecture prevents shadow AI practices and maintains organizational control.

7.4 A simple ROI model

A practical way to evaluate ROI is to begin with time savings. Estimate the number of hours saved per user each month, multiply it by the number of active users, and apply your blended hourly cost. Add the expected monthly lift in retention and expansion from improved customer experience and more proactive product guidance. Finally, subtract the infrastructure and licensing costs associated with running the AI system. Even conservative assumptions typically reveal a short payback period when the rollout focuses on one or two high-impact use cases.
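The model above translates directly into a small calculation. The sketch below is one possible encoding of it on a monthly basis; the field names are hypothetical and the input numbers in the example call are made up purely to show the arithmetic, not benchmarks.

```typescript
interface RoiInputs {
  hoursSavedPerUserPerMonth: number;
  activeUsers: number;
  blendedHourlyCost: number;        // fully loaded cost per hour
  monthlyRevenueLift: number;       // expected retention and expansion lift
  monthlyInfrastructureCost: number;
  monthlyLicensingCost: number;
}

interface RoiResult {
  monthlyTimeSavingsValue: number;
  monthlyCost: number;
  netMonthlyValue: number;
  roiMultiple: number | null;       // net value per unit of cost; null if cost is zero
}

// Time savings + revenue lift - running costs, all per month.
function estimateMonthlyRoi(inputs: RoiInputs): RoiResult {
  const monthlyTimeSavingsValue =
    inputs.hoursSavedPerUserPerMonth * inputs.activeUsers * inputs.blendedHourlyCost;
  const monthlyCost = inputs.monthlyInfrastructureCost + inputs.monthlyLicensingCost;
  const netMonthlyValue = monthlyTimeSavingsValue + inputs.monthlyRevenueLift - monthlyCost;
  return {
    monthlyTimeSavingsValue,
    monthlyCost,
    netMonthlyValue,
    roiMultiple: monthlyCost === 0 ? null : netMonthlyValue / monthlyCost,
  };
}

// Illustrative inputs only: 4 h x 200 users x 60 per hour = 48,000 in time savings.
const exampleRoi = estimateMonthlyRoi({
  hoursSavedPerUserPerMonth: 4,
  activeUsers: 200,
  blendedHourlyCost: 60,
  monthlyRevenueLift: 5000,
  monthlyInfrastructureCost: 8000,
  monthlyLicensingCost: 5000,
});
// exampleRoi.netMonthlyValue is 48,000 + 5,000 - 13,000 = 40,000
```

Running the same function with pessimistic inputs (for example, half the hours saved and no revenue lift) gives a quick sensitivity check before committing to a rollout.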

8. How Calljmp Helps Tech Leaders Build Secure, Data-Aware AI Systems

8.1 Agents as code, not configuration

Calljmp enables teams to define AI behavior entirely in TypeScript. Instead of relying on rigid visual builders or opaque automation tools, engineers write clear, version-controlled logic that integrates directly into their existing systems. This approach gives full control over data flows, orchestration, and execution while maintaining a familiar development workflow.

8.2 Secure ingestion inside your perimeter

Calljmp’s ingestion pipeline is designed for companies handling sensitive or regulated data. It:

- Processes documents inside your VPC, private cloud, or on-prem environment

- Splits content into optimized chunks

- Generates embeddings using local open-source models

- Stores only semantic vectors and metadata in your database

Original documents never leave your environment, and the in-memory copies used for processing are discarded immediately after embedding.

8.3 Built-in agentic runtime for RAG and multi-step AI

The platform includes a runtime purpose-built for multi-step reasoning and orchestration. It supports:

- Long-running agents and workflows

- External tool and API integrations

- Context management, planning, and memory

- Open-source and premium LLMs, selectable per use case

This lets teams combine retrieval, reasoning, and tool execution seamlessly.

8.4 Observability, guardrails, and HITL out of the box

Calljmp provides enterprise-grade visibility and safety mechanisms:

- Full execution traces for every request

- Logs, retries, and structured error handling

- Token usage, latency metrics, and cost insights

- Ability to enforce guardrails and human-in-the-loop (HITL) steps

Every AI decision becomes auditable and explainable.

8.5 Backend included — but not required

Calljmp includes backend primitives to help teams ship faster:

- Authentication

- Database

- Storage

- Realtime events

At the same time, companies can connect their existing backend or databases without changing their architecture. This makes Calljmp a flexible AI layer rather than a forced replacement of what already works.

9. Governance and Risk Checklist for C-Level Tech Leaders

When evaluating any AI system that interacts with internal or sensitive data, use this checklist to ensure the platform aligns with your security, compliance, and governance standards.

- Where is our data processed and stored?

- Does any sensitive data leave our perimeter, and if so, under what conditions?

- What exactly is retained long term: raw content, embeddings, metadata, or logs?

- How long are logs and traces kept, and who inside the organization can access them?

- How does the system enforce authentication, authorization, and role-based access?

- Can we fully trace how an AI answer was produced, including which data and which steps were involved?

- Are there clear guardrails, auditability mechanisms, and human-in-the-loop options?

- Can we disable or replace a model quickly if policies, risk requirements, or pricing change?

Use this checklist to evaluate any AI platform, including Calljmp, and to ensure responsible, secure, and scalable AI adoption across the organization.

10. Conclusion: Turning AI from Experiments into Infrastructure

Companies that succeed with AI are the ones that move beyond isolated experiments and invest in secure, repeatable systems that operate on their own data. Building AI on internal knowledge unlocks efficiency, improves decision-making, enhances customer experience, and creates a durable competitive advantage. But achieving this requires an architecture that protects sensitive information, provides deep observability, and integrates seamlessly with existing systems.

With Calljmp, tech leaders gain a platform designed specifically for this shift—from experimentation to production. By processing data inside the company’s perimeter, storing only embeddings, and defining AI behaviors as code, Calljmp gives organizations the control and flexibility needed to deploy AI safely at scale. The combination of secure ingestion, agentic workflows, and enterprise-grade observability allows companies to transform AI into a reliable part of their operational and product infrastructure.

The opportunity is clear: AI built on your own data is not just a feature—it is the foundation for the next generation of digital workflows, customer experiences, and internal intelligence. Companies that take this step now will be the ones defining how their industries operate in the years ahead.